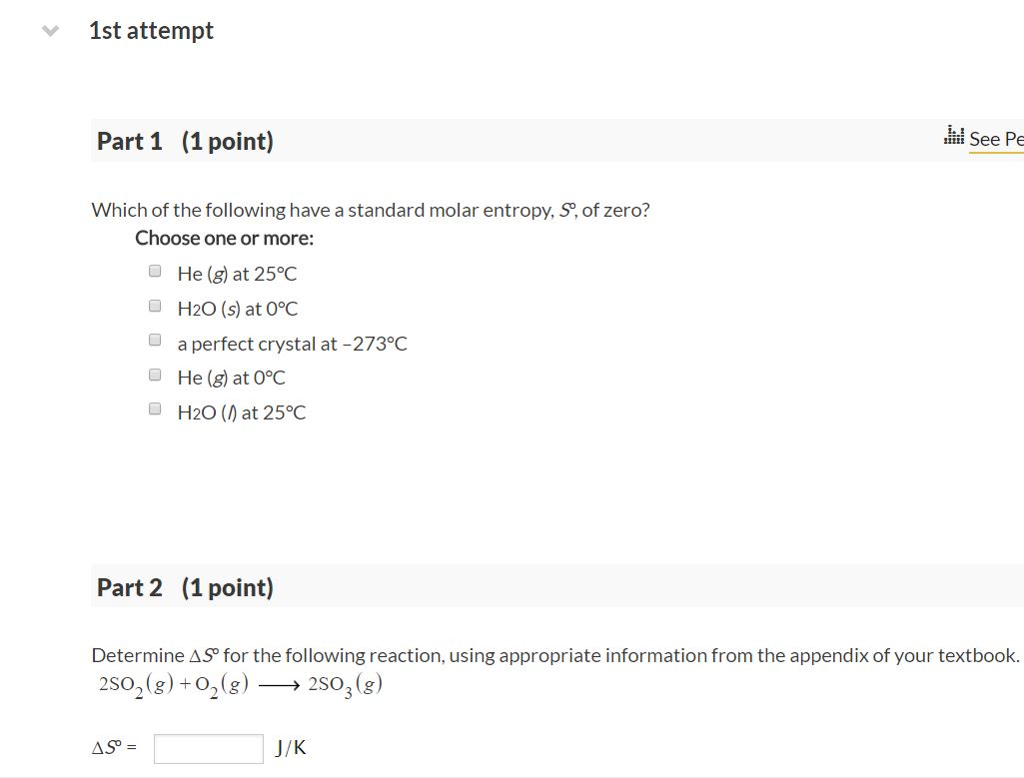

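In thermodynamics, entropy is often described as the disorder of molecules. The change in entropy of an isolated system must be greater than or equal to zero. If it is zero, there is no change in entropy with respect to the previous state, and the process can be described as reversible.

Task: Calculate the Shannon entropy H of a given input string.

Given the discrete random variable X that is a string of N symbols drawn from n different characters, where character i occurs $\mathrm{count}_i$ times, the Shannon entropy of X in bits per symbol uses base-2 logarithms:

$$H_2(X) = -\sum_{i=1}^{n} \frac{\mathrm{count}_i}{N} \log_2 \frac{\mathrm{count}_i}{N}$$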
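
A minimal Python sketch of the task, assuming each character of the string counts as one symbol and its probability is estimated from its frequency in the string. The function name `shannon_entropy` and the zero-entropy convention for an empty string are our own choices, not part of the task statement.

```python
import math
from collections import Counter


def shannon_entropy(text: str) -> float:
    """Shannon entropy of `text` in bits per symbol (base-2 logarithm).

    Each distinct character is one symbol; its probability is estimated
    as count_i / N, where N is the length of the string.
    """
    if not text:
        return 0.0  # convention chosen here: an empty string has zero entropy
    n = len(text)
    counts = Counter(text)
    # (c/n) * log2(n/c) equals -(c/n) * log2(c/n), written so each term is >= 0
    return sum((c / n) * math.log2(n / c) for c in counts.values())


if __name__ == "__main__":
    print(f"{shannon_entropy('1223334444'):.4f}")  # 1.8464
```

For `1223334444`, the four symbols have probabilities 0.1, 0.2, 0.3 and 0.4, which gives about 1.846 bits per symbol; a string made of a single repeated character gives 0 bits.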

A random number uniformly distributed on $[0,1]$ (actually, on any interval of positive measure) has infinite entropy, if we are speaking of the Shannon entropy $H(X)$, which corresponds to the average information content of each occurrence of the variable. Indeed, in a real number on the interval $[0,1]$ I can encode all the information in Wikipedia, and more.

The differential entropy $h(X)$ is another thing. It's not a true entropy (it can be zero or negative), and among other things it depends on the scale: the differential entropy of human height, say, gives different values depending on whether I measure it in centimeters or in inches, which is rather ridiculous.

So, yes, the differential entropy of your variable is zero (for a uniform density on $[0,1]$, $h(X) = \log 1 = 0$). But because the differential entropy is not the Shannon entropy, that means nothing special; in particular, it does not mean that the variable has no uncertainty, like a constant. Actually, the differential entropy of a constant variable (which would correspond to a Dirac delta density) is $-\infty$.
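
To make the contrast concrete, here is a small Python sketch under simplifying assumptions: the helper names are hypothetical, and the closed form $h(X) = \log_2(\text{width})$ holds only for a uniform density. It shows that $h$ is zero on an interval of length 1, negative on a shorter one, and shifts by $\log_2 2.54$ when the unit changes from inches to centimeters. Separately, the Shannon entropy of the uniform variable rounded into $2^k$ equal bins is exactly $k$ bits, so it grows without bound as the bins shrink, which is why $H(X)$ itself is infinite.

```python
import math


def uniform_differential_entropy(width: float) -> float:
    """Differential entropy in bits of a uniform density on an interval of length `width`.

    With f(x) = 1/width on the interval, h = -integral of f * log2(f) dx = log2(width).
    """
    return math.log2(width)


def quantized_uniform_entropy(bits: int) -> float:
    """Shannon entropy of X ~ Uniform[0, 1] rounded into 2**bits equal-width bins.

    Every bin has probability 2**-bits, so the sum equals `bits`.
    """
    n_bins = 2 ** bits
    p = 1.0 / n_bins
    return sum(p * math.log2(1.0 / p) for _ in range(n_bins))


if __name__ == "__main__":
    print(uniform_differential_entropy(1.0))  # 0.0: uniform on [0, 1]
    print(uniform_differential_entropy(0.5))  # -1.0: differential entropy can be negative
    # Hypothetical: heights spread uniformly over a 12-inch range, then re-measured in cm.
    width_in = 12.0
    shift = uniform_differential_entropy(2.54 * width_in) - uniform_differential_entropy(width_in)
    print(shift)  # about 1.345 bits, i.e. log2(2.54): the value depends on the unit
    for bits in (1, 4, 8):
        print(bits, quantized_uniform_entropy(bits))  # 1 1.0, 4 4.0, 8 8.0
```

The quantized entropy gains one bit every time the bin width is halved, so no finite number of bits describes a real number drawn from the interval.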
